#include <leapfrog.h>

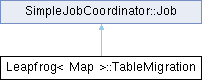

Inheritance diagram for Leapfrog< Map >::TableMigration:

Classes

| Kind | Name |
|---|---|
| struct | Source |

Public Member Functions

| Return type | Signature |
|---|---|
| void | destroy () |
| Source * | getSources () const |
| bool | migrateRange (Table *srcTable, quint64 startIdx) |
| virtual void | run () override |
| | TableMigration (Map &map) |
| virtual | ~TableMigration () override |

Public Member Functions inherited from SimpleJobCoordinator::Job

| Return type | Signature |
|---|---|
| virtual | ~Job () |
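run() overrides the job interface inherited from SimpleJobCoordinator::Job, and the run() entry on this page references SimpleJobCoordinator::storeRelease() and loadConsume(). The following is a minimal sketch of that publish-and-participate pattern under simplifying assumptions: Job here is a stand-in for SimpleJobCoordinator::Job, and JobSlot, CountingJob and participate() are hypothetical names, not the coordinator's actual code. It uses acquire instead of consume, since current compilers implement memory_order_consume as acquire anyway.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical stand-in for SimpleJobCoordinator::Job: virtual destructor plus run().
struct Job {
    virtual ~Job() {}
    virtual void run() = 0;
};

// Hypothetical slot that publishes one job pointer to participating threads.
class JobSlot {
public:
    // Release: all writes made to *job before this call are visible to acquiring readers.
    void storeRelease(Job* job) { m_job.store(job, std::memory_order_release); }

    // Acquire stands in for consume, which compilers promote to acquire in practice.
    Job* loadAcquire() const { return m_job.load(std::memory_order_acquire); }

    // Run the currently published job, if any; returns false when nothing is published.
    bool participate() {
        Job* job = loadAcquire();
        if (!job) return false;
        job->run();
        return true;
    }

private:
    std::atomic<Job*> m_job{nullptr};
};

// Hypothetical job used only to show that participate() dispatches through run().
struct CountingJob : Job {
    int runs = 0;
    void run() override { ++runs; }
};
```

A thread that obtains a non-null pointer from loadAcquire() also sees every write made to that job before storeRelease(), which is what allows a freshly built migration to be handed to other threads without taking a lock.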

Static Public Member Functions

| Return type | Signature |
|---|---|
| static TableMigration * | create (Map &map, quint64 numSources) |

Public Attributes

| Type | Name |
|---|---|
| Table * | m_destination {nullptr} |
| Map & | m_map |
| quint64 | m_numSources {0} |
| Atomic< bool > | m_overflowed |
| Atomic< qint64 > | m_unitsRemaining |
| Atomic< quint64 > | m_workerStatus |

Detailed Description

class Leapfrog< Map >::TableMigration

Definition at line 100 of file leapfrog.h.

Constructor & Destructor Documentation

◆ TableMigration()

Leapfrog< Map >::TableMigration::TableMigration (Map & map) [inline]

Definition at line 115 of file leapfrog.h.

◆ ~TableMigration()

Leapfrog< Map >::TableMigration::~TableMigration () [inline, override, virtual]

Definition at line 133 of file leapfrog.h.

Member Function Documentation

◆ create()

TableMigration * Leapfrog< Map >::TableMigration::create (Map & map, quint64 numSources) [inline, static]

Definition at line 119 of file leapfrog.h.

References Leapfrog< Map >::TableMigration::m_numSources, Leapfrog< Map >::TableMigration::m_overflowed, Leapfrog< Map >::TableMigration::m_unitsRemaining, Leapfrog< Map >::TableMigration::m_workerStatus, Atomic< T >::storeNonatomic(), and Leapfrog< Map >::TableMigration::TableMigration().

◆ destroy()

Definition at line 137 of file leapfrog.h.

References Leapfrog< Map >::Table::destroy(), Leapfrog< Map >::TableMigration::getSources(), Leapfrog< Map >::TableMigration::m_numSources, Leapfrog< Map >::TableMigration::Source::table, and Leapfrog< Map >::TableMigration::~TableMigration().

◆ getSources()

Definition at line 148 of file leapfrog.h.
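create() takes the number of sources, getSources() hands back a pointer, and destroy() references ~TableMigration() explicitly. Together these suggest that the Source array is stored inline, directly after the object, in a single allocation, as in Jeff Preshing's Junction library from which this map appears to be ported. The sketch below shows that idiom under that assumption only; SketchMigration, SketchTable and g_liveTables are hypothetical stand-ins, and plain std::atomic replaces the library's Atomic< T >.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <new>

// Hypothetical stand-in for Leapfrog<Map>::Table; counts live instances for the demo.
int g_liveTables = 0;
struct SketchTable {
    SketchTable() { ++g_liveTables; }
    ~SketchTable() { --g_liveTables; }
};

// Hypothetical sketch of the "object header + trailing Source array" layout.
struct SketchMigration {
    struct Source {
        SketchTable* table;
        std::atomic<std::uint64_t> sourceIndex;
        Source() : table(nullptr), sourceIndex(0) {}
    };

    std::uint64_t m_numSources{0};
    std::atomic<std::uint64_t> m_workerStatus;
    std::atomic<bool> m_overflowed;
    std::atomic<std::int64_t> m_unitsRemaining;

    // One malloc holds the object followed by numSources Source slots.
    static SketchMigration* create(std::uint64_t numSources) {
        void* mem = std::malloc(sizeof(SketchMigration) + sizeof(Source) * numSources);
        if (!mem) throw std::bad_alloc();
        SketchMigration* m = new (mem) SketchMigration();
        // The object is not shared with other threads yet, so relaxed stores suffice.
        m->m_workerStatus.store(0, std::memory_order_relaxed);
        m->m_overflowed.store(false, std::memory_order_relaxed);
        m->m_unitsRemaining.store(0, std::memory_order_relaxed);
        m->m_numSources = numSources;
        for (std::uint64_t i = 0; i < numSources; ++i)
            new (&m->getSources()[i]) Source();
        return m;
    }

    // The Source array begins immediately after the object itself.
    Source* getSources() const {
        return reinterpret_cast<Source*>(const_cast<SketchMigration*>(this) + 1);
    }

    // Free the source tables, run the destructors explicitly, then release the block.
    void destroy() {
        Source* sources = getSources();
        for (std::uint64_t i = 0; i < m_numSources; ++i) {
            delete sources[i].table;   // deleting nullptr is a no-op
            sources[i].~Source();
        }
        this->~SketchMigration();
        std::free(this);
    }
};

// The trailing Source slots must be correctly aligned right after the header.
static_assert(sizeof(SketchMigration) % alignof(SketchMigration::Source) == 0,
              "trailing Source array would be misaligned");
```

The single allocation keeps the header and its variable-length source list contiguous, which is why the object cannot be released with plain delete and needs a dedicated destroy().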

◆ migrateRange()

bool Leapfrog< Map >::TableMigration::migrateRange (Table * srcTable, quint64 startIdx)

Definition at line 358 of file leapfrog.h.

References Leapfrog< Map >::CellGroup::cells, Atomic< T >::compareExchange(), Atomic< T >::compareExchangeStrong(), Leapfrog< Map >::Table::getCellGroups(), Leapfrog< Map >::Cell::hash, Leapfrog< Map >::insertOrFind(), Leapfrog< Map >::InsertResult_AlreadyFound, Leapfrog< Map >::InsertResult_Overflow, KIS_ASSERT_RECOVER_NOOP, Atomic< T >::load(), Leapfrog< Map >::TableMigration::m_destination, Relaxed, Leapfrog< Map >::Table::sizeMask, Atomic< T >::store(), Leapfrog< Map >::TableMigrationUnitSize, and Leapfrog< Map >::Cell::value.
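The references for migrateRange() (compareExchange(), insertOrFind(), InsertResult_Overflow, TableMigrationUnitSize) suggest the usual Leapfrog approach: walk one unit of source cells, copy each live key/value pair into m_destination, and seal the source cell with a redirect marker so that later writers go to the new table. The sketch below is a simplified, hypothetical version and not this header's code: flat arrays replace CellGroup, hash 0 and value 0 act as null sentinels, an all-ones value is the redirect marker, and a fixed probe limit (kMaxProbe) stands in for the real overflow condition.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical sentinels and limits; the real values come from the map's traits.
constexpr std::uint64_t kNullHash = 0;                   // unused cell
constexpr std::uint64_t kNullValue = 0;                  // empty or deleted value
constexpr std::uint64_t kRedirect = ~std::uint64_t(0);   // cell already migrated
constexpr std::uint64_t kUnitSize = 4;                   // cells per migration unit
constexpr std::uint64_t kMaxProbe = 8;                   // probes before "overflow"

struct Cell {
    std::atomic<std::uint64_t> hash{kNullHash};
    std::atomic<std::uint64_t> value{kNullValue};
};

struct FlatTable {
    std::vector<Cell> cells;   // size must be a power of two
    std::uint64_t sizeMask;
    explicit FlatTable(std::uint64_t size) : cells(size), sizeMask(size - 1) {}
};

// Reserve a destination cell for `hash` by linear probing; nullptr means overflow.
Cell* insertOrFind(FlatTable& dst, std::uint64_t hash) {
    for (std::uint64_t i = 0; i < kMaxProbe; ++i) {
        Cell& c = dst.cells[(hash + i) & dst.sizeMask];
        std::uint64_t expected = kNullHash;
        if (c.hash.compare_exchange_strong(expected, hash, std::memory_order_relaxed) ||
            expected == hash)
            return &c;
    }
    return nullptr;
}

// Migrate cells [startIdx, startIdx + kUnitSize) of src into dst.
// Returns false if dst overflowed, in which case the caller must retry elsewhere.
bool migrateRange(FlatTable& src, FlatTable& dst, std::uint64_t startIdx) {
    std::uint64_t endIdx = std::min(startIdx + kUnitSize, src.sizeMask + 1);
    for (std::uint64_t idx = startIdx; idx < endIdx; ++idx) {
        Cell& cell = src.cells[idx];
        for (;;) {
            std::uint64_t h = cell.hash.load(std::memory_order_relaxed);
            std::uint64_t v = cell.value.load(std::memory_order_relaxed);
            if (v == kRedirect) break;   // another worker already sealed this cell
            if (h == kNullHash || v == kNullValue) {
                // Unused or deleted cell: seal it; on a race with a writer, re-read.
                if (cell.value.compare_exchange_strong(v, kRedirect, std::memory_order_relaxed))
                    break;
                continue;
            }
            Cell* d = insertOrFind(dst, h);
            if (!d) return false;        // destination overflow
            // Copy first, then seal; if a writer changed the value meanwhile, copy again.
            d->value.store(v, std::memory_order_relaxed);
            if (cell.value.compare_exchange_strong(v, kRedirect, std::memory_order_relaxed))
                break;
        }
    }
    return true;
}
```

A false return leaves part of the unit already copied and sealed, so the caller records the overflow rather than retrying into the same destination.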

◆ run()

Implements SimpleJobCoordinator::Job.

Definition at line 458 of file leapfrog.h.

References AcquireRelease, Leapfrog< Map >::TableMigration::create(), Leapfrog< Map >::Table::create(), Leapfrog< Map >::TableMigration::destroy(), Leapfrog< Map >::Table::getNumMigrationUnits(), Leapfrog< Map >::TableMigration::getSources(), Leapfrog< Map >::Table::jobCoordinator, KIS_ASSERT_RECOVER_NOOP, SimpleJobCoordinator::loadConsume(), Leapfrog< Map >::TableMigration::m_destination, Leapfrog< Map >::TableMigration::m_numSources, Leapfrog< Map >::TableMigration::m_unitsRemaining, Leapfrog< Map >::Table::mutex, Relaxed, source(), Leapfrog< Map >::TableMigration::Source::sourceIndex, Atomic< T >::storeNonatomic(), SimpleJobCoordinator::storeRelease(), Leapfrog< Map >::TableMigration::Source::table, and Leapfrog< Map >::TableMigrationUnitSize.
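run() references Source::sourceIndex, m_unitsRemaining, TableMigrationUnitSize and Table::getNumMigrationUnits(), which points to chunked work sharing: every participating thread claims the next unit of a source with an atomic increment, and a shared counter tells the workers when the whole migration is finished. A minimal sketch of that scheme, assuming hypothetical names (SourceRange, MigrationSketch, runMigration) and a counter in place of the actual cell copying:

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstdint>
#include <memory>
#include <thread>
#include <vector>

constexpr std::uint64_t kUnitSize = 16;   // assumed stand-in for TableMigrationUnitSize

struct SourceRange {
    std::uint64_t numCells;                      // stand-in for the source table's size
    std::atomic<std::uint64_t> sourceIndex{0};   // next unclaimed cell index
    explicit SourceRange(std::uint64_t n) : numCells(n) {}
};

struct MigrationSketch {
    std::vector<std::unique_ptr<SourceRange>> sources;
    std::atomic<std::int64_t> unitsRemaining{0};
    std::atomic<std::uint64_t> cellsMigrated{0};   // demo bookkeeping only
    std::atomic<int> finishers{0};                 // workers that saw the count reach zero

    void runWorker() {
        for (auto& src : sources) {
            for (;;) {
                // Claim the next unit; overshooting numCells just means "nothing left".
                std::uint64_t start = src->sourceIndex.fetch_add(kUnitSize, std::memory_order_relaxed);
                if (start >= src->numCells) break;
                std::uint64_t end = std::min(start + kUnitSize, src->numCells);
                cellsMigrated.fetch_add(end - start, std::memory_order_relaxed);  // "migrate"
                // Exactly one worker observes the 1 -> 0 transition and would publish the result.
                if (unitsRemaining.fetch_sub(1, std::memory_order_acq_rel) == 1)
                    finishers.fetch_add(1, std::memory_order_relaxed);
            }
        }
    }
};

// Set the unit counter, then let numThreads workers drain all sources.
void runMigration(MigrationSketch& m, int numThreads) {
    std::int64_t units = 0;
    for (auto& src : m.sources)
        units += static_cast<std::int64_t>((src->numCells + kUnitSize - 1) / kUnitSize);
    m.unitsRemaining.store(units, std::memory_order_relaxed);   // threads start after this
    std::vector<std::thread> workers;
    for (int i = 0; i < numThreads; ++i)
        workers.emplace_back([&m] { m.runWorker(); });
    for (auto& t : workers) t.join();
}
```

Because each fetch_add hands out a distinct start index, every unit is processed exactly once however many threads join, and exactly one thread sees the counter drop from 1 to 0; that thread is the natural place to publish m_destination or to handle an overflow.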

Member Data Documentation

◆ m_destination

Definition at line 109 of file leapfrog.h.

◆ m_map

Map& Leapfrog< Map >::TableMigration::m_map

Definition at line 108 of file leapfrog.h.

◆ m_numSources

quint64 Leapfrog< Map >::TableMigration::m_numSources {0}

Definition at line 113 of file leapfrog.h.

◆ m_overflowed

Definition at line 111 of file leapfrog.h.
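m_overflowed records that the destination table ran out of room during a migration. In Junction the migration does not give up in that case: it schedules a new migration whose sources are the old sources plus the overflowed destination, into a table twice as large, which is consistent with run() referencing both TableMigration::create() and Table::create(). The sketch below models only that planning step; TableStub, MigrationPlan, planRetryAfterOverflow() and unitsToMigrate() are hypothetical, and this page does not confirm the growth factor.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in: a table reduced to its cell count (a power of two).
struct TableStub {
    std::uint64_t size;
};

struct MigrationPlan {
    std::vector<TableStub*> sources;
    TableStub* destination = nullptr;
};

// After an overflow, keep every old source, add the overflowed destination as one more
// source (it already holds migrated entries), and target a table twice as large.
// The caller owns the newly allocated destination.
MigrationPlan planRetryAfterOverflow(const MigrationPlan& failed) {
    MigrationPlan next;
    next.sources = failed.sources;
    next.sources.push_back(failed.destination);
    next.destination = new TableStub{failed.destination->size * 2};
    return next;
}

// Units of work the retry must process, rounding each source up to whole units.
std::uint64_t unitsToMigrate(const MigrationPlan& plan, std::uint64_t unitSize) {
    std::uint64_t units = 0;
    for (const TableStub* t : plan.sources)
        units += (t->size + unitSize - 1) / unitSize;
    return units;
}
```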

◆ m_unitsRemaining

Definition at line 112 of file leapfrog.h.

◆ m_workerStatus

Definition at line 110 of file leapfrog.h.
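This page does not document how m_workerStatus is encoded. In Junction the word packs an end flag into bit 0 and the number of active workers into the remaining bits, so a thread may join a migration only while the flag is clear. The sketch below assumes the same encoding; WorkerStatus and its methods are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Assumed encoding: bit 0 = end flag, bits 1.. = number of workers inside the migration.
class WorkerStatus {
public:
    // Join only while the end flag is clear; returns false if the migration has ended.
    bool tryJoin() {
        std::uint64_t s = m_status.load(std::memory_order_relaxed);
        do {
            if (s & 1) return false;
        } while (!m_status.compare_exchange_weak(s, s + 2, std::memory_order_relaxed));
        return true;
    }

    // Set the end flag so that no new workers can join.
    void markEnded() { m_status.fetch_or(1, std::memory_order_relaxed); }

    // Leave; returns true if the flag was set and this caller was the last worker.
    bool leave() {
        std::uint64_t prev = m_status.fetch_sub(2, std::memory_order_acq_rel);
        return prev == 3;   // end flag (1) plus exactly one worker (2)
    }

    std::uint64_t workers() const { return m_status.load(std::memory_order_relaxed) >> 1; }

private:
    std::atomic<std::uint64_t> m_status{0};
};
```

Once the flag is set the worker count can only fall, so the thread whose leave() returns true knows that no other thread is still touching the migration and can safely perform the final cleanup.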

The documentation for this class was generated from the following file:

- libs/image/3rdparty/lock_free_map/leapfrog.h